Observing how users troubleshoot in 3D

Organization

Safety for industrial automation and robotics | Professional project

Activities

Contextual inquiry | Observation guide design | Qualitative analysis | Review of analogous products

Summary

This project began as a UX design task, but task analysis showed that user research was needed to define the feature requirements before the design work could be completed. With limited time and resources, I ran a small internal study and identified the workflows and tools that users needed for troubleshooting. I proposed three approaches to the product team, each with a different scope. Given the limited potential impact for users, one of these approaches was to defer the feature until it could be adequately addressed. The product team selected this option, saving approximately one week of critical development and design time.

Learning about the feature

Before beginning the design work, I worked with the product designer to better understand the feature, including the organization's goals and rationale for it and the resource limitations. Development time turned out to be a major constraint. Although the rationale was clear, it was not clear how it should translate into a design that could be executed quickly and in a meaningful way.

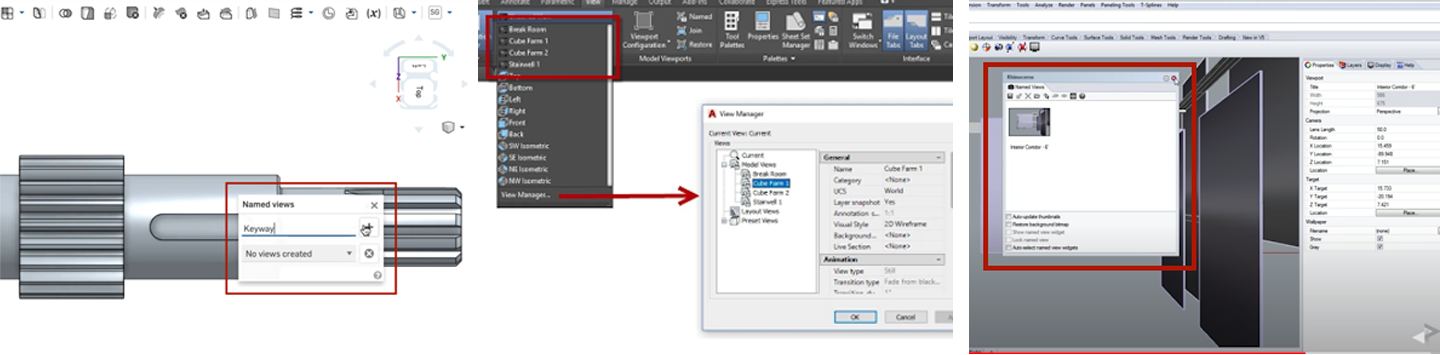

What do other products do?

To establish a design framework, I reviewed other 3D applications with similar features to understand the relevant information, design conventions for workflows, and user needs. This comparison yielded some useful insights, but it also highlighted that the user goals for our application differed from those of the other applications and that we did not fully understand the use cases. Working with the product design team, I determined that the feature would be used primarily during troubleshooting operations.

Asking questions

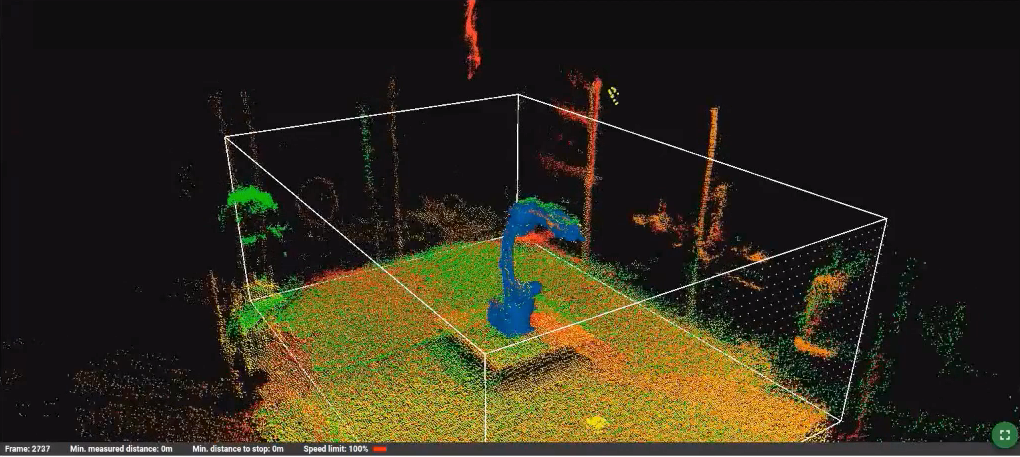

Once the user and the context were determined, we still needed to describe the use cases. How do users currently troubleshoot the system? What other information do they seek? What other challenges do they face while troubleshooting? Answering these questions required user research, so I opted to conduct a contextual inquiry to observe users troubleshooting with the system. This approach would allow me to observe how they interacted with the physical space and the 3D space on the screen, and to learn what they were thinking about while they worked.

Advocating research

Given the time and resource constraints and the nature of the product (pre-launch, for domain experts), all participants were internal users with experience troubleshooting the system. Although this group was not representative of our end users, the study would still provide the necessary insights into the troubleshooting process that we could not get through speculation.

Running the sessions & insights

After running a pilot session, I conducted four one-hour interviews on-site with an active safety system and robot. Participants were asked to complete a series of think-aloud tasks and to discuss their experiences and pain points. All sessions were recorded, including the participant’s screen. Running the sessions on-site allowed me to observe how participants moved around the space as well as their body language, such as looking toward the robot and using their fingers to form a coordinate axis as a visual aid.

Findings

Over the next couple of days, I coded (and recoded) observations into findings, which I grouped into categories relevant to the initial questions. Although the sample size was small, behavior was very consistent, providing a clear understanding of the tools and workflows needed to support users. Some of these findings aligned with the results of the initial analogous product research. However, there were also unexpected behaviors related to the system requirements that indicated a need for a certain level of sophistication from the feature.

Scoping recommendations

Working with the product design team, I prioritized the findings against engineering effort. I was then able to propose three alternative approaches to the feature for the product acceptance committee to review. They included:

1. Building the feature with all the supporting tools to meet the user needs as observed during the sessions.

2. Building a minimum viable version of the feature that would meet only the most rudimentary of the users’ needs.

3. Eliminating the feature, given that users are able to complete all necessary tasks without it, and revisiting it in a future release as time allows.

Impact

The committee opted to eliminate the feature, saving approximately a week of development and design time. In a subsequent release, when the schedule allowed for the work, the feature was revisited and the minimal version was implemented.

The research had additional benefits beyond the specific feature, including providing deeper institutional knowledge about system use and surfacing pain points to be addressed with future work.